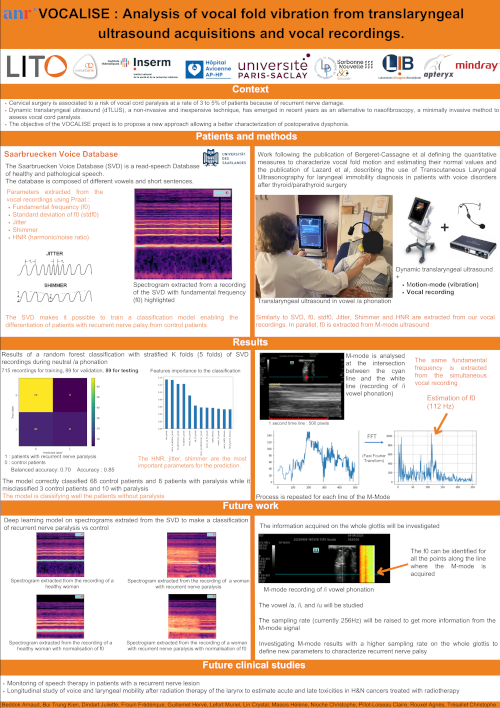

Characterization of VOCAL fold motion and monItoring of Speech thErapy using dynamic translaryngeal ultrasound

Dynamic translaryngeal ultrasound (dTLUS), a non-invasive and inexpensive technique, has emerged in recent years as an alternative to nasofibroscopy, a minimally invasive procedure, for assessing vocal cord paralysis.

The objective of VOCALISE is to propose a new approach for better characterizing postoperative or radiation-induced dysphonia. It complements dTLUS with optimized acquisitions of the vibration of each vocal cord during phonation, performed simultaneously with voice/speech recordings.

Data acquired in the VOCALE clinical trial will be used to test our new software components for assessing vocal fold mobility. The overall approach will also be evaluated for monitoring speech therapy in patients with recurrent laryngeal nerve injury (clinical trial awaiting approval) and for characterizing radiation-induced dysphonia in patients with head and neck cancer treated with radiotherapy.

For this project, four academic partners (LITO, LIB, LPP and Avicenne Hospital, Departments of Surgery and Nuclear Medicine) have joined forces with two industrial partners (Mindray Medical France and Apteryx). A collaboration with the Godinot Institute in Reims is underway.

People involved in the lab: Arnaud Beddok, Trung Kien Bui, Juliette Dindart, Frédérique Frouin, Agnès Rouxel. Alumni: Lydia Abdemeziem, Fahad Khalid

Publications: hal-04623096v1

ICVPB 2024 program, pp. 38-39 and pp. 41-42: https://www.icvpb-2024.de/documents/8/Program_ICVPB2024.pdf

Project funded by ANR (ANR-22-CE19-0035)

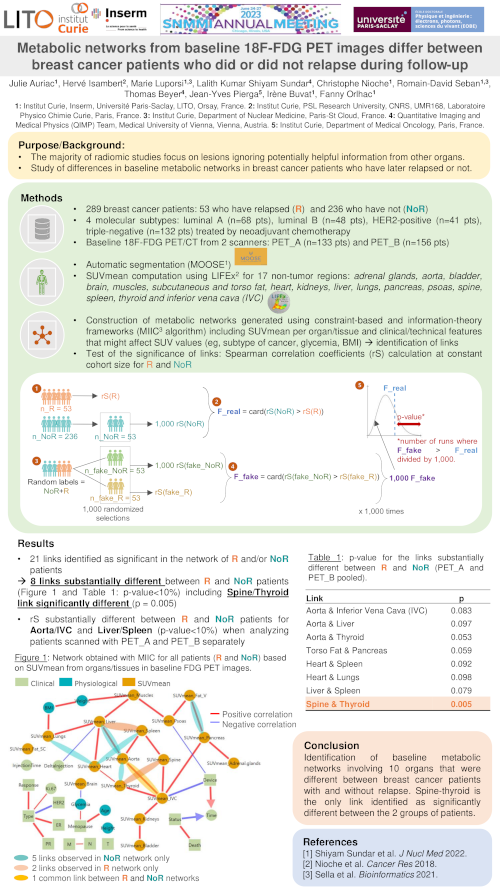

Inter-Organ-PET is an international research project funded by the 2022 Joint ANR-FWF Projects Scheme between the Agence Nationale de la Recherche (ANR) and the Austrian Science Fund (FWF), entitled “Identifying metabolic networks using inter-organ analysis of whole-body [18F]FDG-PET imaging data”

(FWF I-6174B)

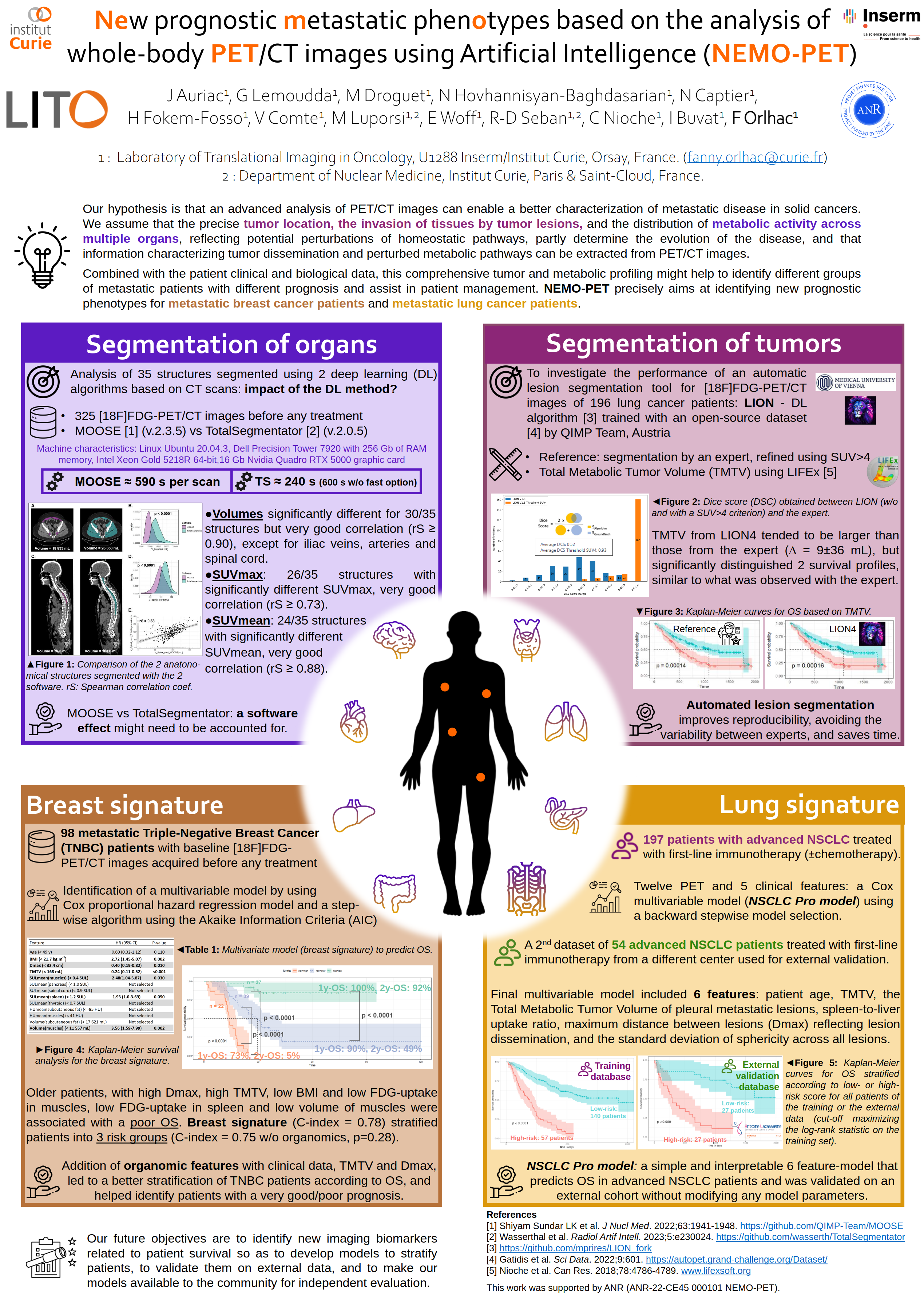

This project uses whole-body molecular imaging with [18F]FDG-PET to assess inter-organ effects in breast cancer patients, compared with normal homeostatic pathways, and thereby to help build prediction models for better management of breast cancer patients.

The AI.DReAM project, funded by BPI France, brings together a consortium of 9 partners (GE Healthcare, 4 SMEs and start-ups, 3 clinical partners, and LITO as the only academic laboratory). The project aims to accelerate the development and market access of artificial intelligence applications in medical imaging. Our laboratory's role is to develop the methods needed to ensure the quality control and robustness of radiomic models (classical or deep learning-based) and their ability to produce reliable results across a wide variety of images. To evaluate our approaches, we work with clinicians from Institut Curie and with the consortium's clinical partners: AP-HP, Gustave Roussy, and Hôpital Saint Joseph in Paris.

People involved in the lab: Irène Buvat (PI for the lab), Frédérique Frouin, Kibrom Girum, Caroline Malhaire, Fanny Orlhac, Paul Steinmetz.

Radiomic and artificial intelligence models will be easier to apply in a clinical context if they are explainable and provide an estimate of the confidence associated with their results. We are therefore developing interpretable radiomic models that can be built from a limited amount of data (fewer than a hundred patients), with the aim of revealing biological mechanisms from the models and/or verifying the results they produce. In particular, we are developing these methods for patients treated with radiotherapy, to determine whether identifying the subregions responsible for treatment resistance or recurrence would allow the dose delivery plan to be modified to counter these poor outcomes.

People involved in the lab: Fanny Orlhac, Irène Buvat, Frédérique Frouin, Christophe Nioche, Thibault Escobar (PhD student), Hamid Mammar, Laurence Champion, Romain-David Seban, Claire Provost

Publication:

- Escobar T, Vauclin S, Orlhac F, Nioche C, Pineau P, Champion L, Brisse H, Buvat I. Voxel-wise supervised analysis of tumors with multimodal engineered features to highlight interpretable biological patterns. Med Phys. 49(6):3816-3829, 2022. DOI: 10.1002/mp.15603

This work is supported by Dosisoft.